NOTES

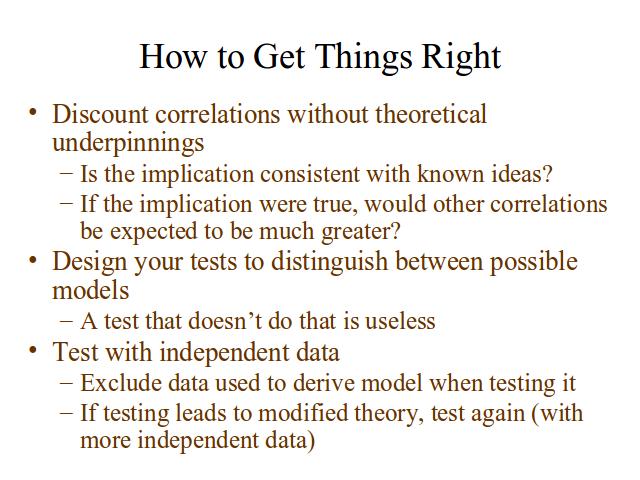

Excluding the data used to derive the model is very important: ideally, you should test the theories you have come up with on new data only. Comparing a theory to the data used to derive it tells you nothing about how good the theory is at predicting, as opposed to explaining.
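As a rough illustration of why in-sample fit says little about predictive power, here is a minimal Python sketch under an assumed setup: the outcome and all 200 candidate explanatory variables are pure random noise. This is a hypothetical scenario, not data from the talk. Searching the "derivation" data for the best-correlated variable turns up an apparently meaningful correlation, but testing that same variable on freshly generated data typically shows the effect vanishing. The names `noise_dataset` and `in_vs_out_of_sample` are illustrative only.

```python
import random
import statistics


def correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def noise_dataset(rng, n_rows, n_features):
    """Hypothetical dataset in which no feature is truly related to the outcome."""
    outcome = [rng.gauss(0, 1) for _ in range(n_rows)]
    features = [[rng.gauss(0, 1) for _ in range(n_rows)] for _ in range(n_features)]
    return outcome, features


def in_vs_out_of_sample(seed=0, n_rows=50, n_features=200):
    """Return (correlation on derivation data, correlation on new data)
    for the single feature that looked best on the derivation data."""
    rng = random.Random(seed)
    # Derivation data: search every feature for the strongest correlation.
    y_old, x_old = noise_dataset(rng, n_rows, n_features)
    best = max(range(n_features), key=lambda j: abs(correlation(x_old[j], y_old)))
    r_old = correlation(x_old[best], y_old)
    # New data from the same process: test the "theory" that feature `best` matters.
    y_new, x_new = noise_dataset(rng, n_rows, n_features)
    r_new = correlation(x_new[best], y_new)
    return r_old, r_new
```

Running `in_vs_out_of_sample()` with different seeds usually gives a sizeable correlation on the derivation data and one close to zero on the new data; the exact values depend on the seed. Using pure noise is a deliberate design choice: it guarantees that any correlation found is a coincidence, so only the new-data check reveals the truth.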

The audience got very involved at this point and we discussed various examples of how to get things right.

For example, I discussed the difference between two approaches. In the first, you find in the data that Israeli fighter pilots tend to father many more daughters than sons (I have read of a study that shows this). In the second, you start with a theory that predicts a correlation between working in occupations that expose men to high acceleration and fathering more daughters, and then go to the data to find out whether the predicted correlation exists. In the first case, you have something that may be a coincidence: if you look at a hundred possible correlations, about 5 of them can be expected to show significance at the 5% level simply by chance. In the second case, you have an idea that can be tested by looking at fighter pilot data, astronaut data, and the like.
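The "five in a hundred" arithmetic can be checked with a short Python sketch. It assumes the hundred tests are independent and that every null hypothesis is true (no real effects at all), so each test statistic is approximately standard normal; both assumptions are simplifications for illustration. The function names are hypothetical.

```python
import random

ALPHA = 0.05
Z_CRIT = 1.959964  # two-sided critical value of the standard normal at the 5% level


def expected_false_positives(n_tests, alpha=ALPHA):
    """Expected number of 'significant' results when every null hypothesis is true."""
    return n_tests * alpha


def prob_at_least_one(n_tests, alpha=ALPHA):
    """Chance that at least one of n independent true-null tests comes out significant."""
    return 1 - (1 - alpha) ** n_tests


def simulate_false_positives(n_tests=100, trials=2000, seed=1):
    """Average count of spurious 'hits' among n_tests tests run on pure noise."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        # Under a true null, each test statistic is (approximately) standard normal,
        # so |z| exceeds Z_CRIT about 5% of the time.
        total += sum(abs(rng.gauss(0, 1)) > Z_CRIT for _ in range(n_tests))
    return total / trials
```

`expected_false_positives(100)` gives 5.0 and `prob_at_least_one(100)` gives about 0.994, so looking through a hundred unrelated correlations almost guarantees at least one "finding". The simulated average from `simulate_false_positives()` should land close to 5.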

Someone in the audience wanted to defend conclusions drawn from particular incidents. I expressed some sympathy with that idea, saying that an in-depth investigation into a particular incident is a case study, not an anecdote, and that case-study data can be very valuable. Ideally, of course, case studies should help suggest which statistics to collect next (and statistics should suggest good ideas for case studies).